OpenAI Tech Stack: A Comprehensive Exploration

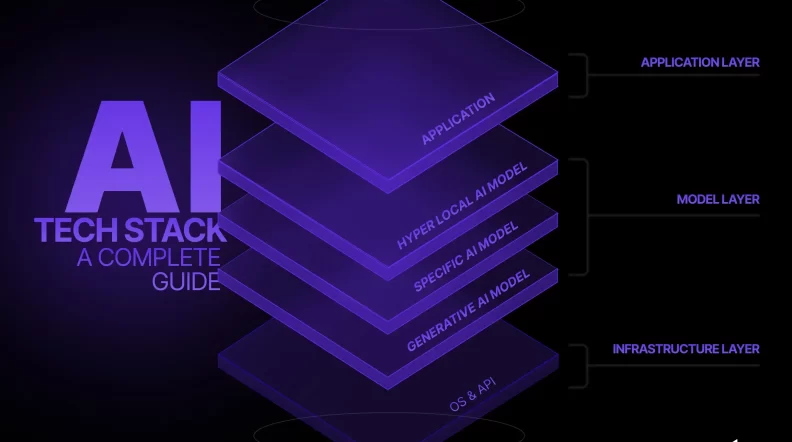

OpenAI’s technology stack is the set of tools and frameworks it uses to build and run advanced artificial intelligence (AI) systems. The stack spans programming languages, software development methodologies, and application programming interface (API) management processes. Together, these elements support AI models that can understand and generate human-like text, process audio files, and more.

Within this stack, OpenAI relies on large language models that form the backbone of its generative AI capabilities. These models are trained on vast datasets, enabling them to produce outputs that closely resemble human-written language. An Active Directory-style directory service supports team collaboration and resource management by centralizing access to shared tools and data, which keeps development efforts streamlined.

The stack’s versatility and depth empower OpenAI to push the boundaries of what’s possible in AI, driving innovation and setting new standards for machine learning and automation. From chatbots to more complex predictive models, OpenAI’s tech stack is at the forefront of generative AI development.

Understanding the Foundation of OpenAI’s Technology

At the foundation of OpenAI’s technology lies a selection of programming languages that are pivotal in building and maintaining its AI models. These languages are chosen for their flexibility, efficiency, and support for advanced machine learning concepts. They enable developers to construct robust frameworks that are essential for training sophisticated AI systems.

Languages & Frameworks

Programming languages are central to OpenAI’s technological advancements. By using languages that support high-level computation and data processing, OpenAI can develop complex AI models. These languages, coupled with their frameworks, provide the environment needed for experimenting with and deploying generative AI applications.

The Building Blocks of OpenAI’s Generative AI

OpenAI constructs its generative AI systems using a blend of proprietary and open-source components. Central to this are the advanced language models that have the capacity to learn from and interpret vast amounts of textual data. This allows them to generate coherent and contextually relevant text based on the input they receive.

Moreover, these models are continually refined and improved through iterative training processes, incorporating feedback loops that enhance their accuracy and reliability. This ongoing development cycle ensures that OpenAI’s AI systems remain at the cutting edge of generative technology.

Development Tools and Environments

Software development tools and environments are integral to OpenAI’s ability to innovate and iterate on its AI models rapidly. These tools facilitate coding, testing, and deployment, making it easier for developers to bring their ideas to life. The choice of environments is tailored to support the complex algorithms that underpin OpenAI’s AI systems.

Empowering Innovation and Efficiency

The strategic selection of development tools accelerates the creation and refinement of AI models. By providing a robust suite of software development tools, OpenAI enables its engineers to focus more on innovation and less on the intricacies of the development process. This empowers them to experiment freely and push the boundaries of AI technology.

Furthermore, these tools support collaborative workflows, allowing teams to work together seamlessly regardless of their physical location. This collaborative approach is critical in solving complex problems and fostering an environment of continuous learning and improvement.

Libraries and Application Hosting Solutions

OpenAI utilizes a range of libraries and application hosting solutions to bolster its computational capabilities. These resources provide the infrastructure necessary for running large-scale AI models efficiently. They also allow for the rapid deployment of AI applications, enabling OpenAI to bring its innovations to market swiftly.

Accelerating OpenAI’s Computational Capabilities

Specialized libraries enhance OpenAI’s ability to process and analyze data at very large scale. These libraries contain optimized algorithms that speed up computation, which is crucial for training complex AI models. Additionally, application hosting solutions ensure that these models are accessible and scalable, catering to the needs of a global user base.

Together, these components form a powerful ecosystem that supports the demanding requirements of generative AI development. They enable OpenAI to manage its resources effectively, ensuring that computational power is available where and when it’s needed most. This accelerates the pace of AI research and development, pushing the boundaries of what’s possible in the field.

The Core Components of OpenAI’s Infrastructure

OpenAI’s infrastructure is built on a foundation of data stores and application utilities that manage and process massive datasets. This infrastructure supports the entire lifecycle of AI model development, from initial training to deployment and scaling. It’s designed to be robust, scalable, and secure, meeting the high demands of cutting-edge AI research.

Data Stores and Application Utilities

The backbone of OpenAI’s infrastructure includes data stores and application utilities that handle vast amounts of information. These systems are optimized for speed and efficiency, ensuring that data can be accessed and processed quickly. They are crucial for training AI models on large datasets, a fundamental aspect of developing accurate and reliable generative AI.

Managing Massive Datasets for AI Training

OpenAI’s AI models are trained on datasets comprising billions of data points. Managing these massive datasets requires sophisticated data stores that can handle the volume, velocity, and variety of data involved. These stores provide the foundation for AI training, enabling models to learn and adapt based on real-world information.

Application utilities further streamline the training process, automating tasks such as data cleaning, preprocessing, and analysis. This automation allows researchers to focus on model development and optimization, significantly reducing the time and effort required to train AI models.
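As a rough illustration of the kind of cleaning and preprocessing automation described above, the following Python sketch normalizes text records, drops very short ones, and removes exact duplicates. It is not OpenAI’s actual pipeline: the function names `clean_text` and `preprocess` and the `min_chars` threshold are assumptions chosen for this example.

```python
import re
import unicodedata


def clean_text(raw: str) -> str:
    """Normalize Unicode, strip control characters, and collapse whitespace."""
    text = unicodedata.normalize("NFKC", raw)
    # Drop non-printable control characters but keep whitespace for now.
    text = "".join(ch for ch in text if ch.isprintable() or ch.isspace())
    # Collapse any run of whitespace into a single space.
    return re.sub(r"\s+", " ", text).strip()


def preprocess(records: list[str], min_chars: int = 20) -> list[str]:
    """Clean each record, skip short ones, and remove exact duplicates.

    min_chars is a hypothetical quality threshold, not a documented value.
    """
    seen: set[str] = set()
    kept: list[str] = []
    for raw in records:
        text = clean_text(raw)
        if len(text) < min_chars or text in seen:
            continue
        seen.add(text)
        kept.append(text)
    return kept
```

Calling `preprocess(records)` on a list of raw strings returns the cleaned, deduplicated records in their original order, which is the sort of repetitive step that automation takes off a researcher’s plate.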

Monitoring and Analytics Tools

To ensure the performance and scalability of its AI systems, OpenAI employs a suite of monitoring and analytics tools. These tools provide real-time insights into system health, performance bottlenecks, and usage patterns. They are essential for maintaining the reliability and efficiency of AI models in production environments.

Ensuring Performance and Scalability

Monitoring tools allow OpenAI to detect and resolve issues proactively, minimizing downtime and ensuring that AI applications remain available to users. Analytics tools, on the other hand, offer valuable data on user interaction and system performance. This information is critical for optimizing AI models and infrastructure for better scalability and user experience.

Together, these tools form a comprehensive monitoring and analytics framework that supports OpenAI’s commitment to delivering high-quality, reliable AI services. They enable continuous improvement, ensuring that OpenAI’s AI systems meet the evolving needs of users and the industry.
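To make the idea of proactive detection more concrete, here is a minimal Python sketch of a rolling latency monitor. It is only an illustration under stated assumptions: the `LatencyMonitor` class, the window size, and the 500 ms threshold are hypothetical and do not describe any tool OpenAI is known to use.

```python
from collections import deque


class LatencyMonitor:
    """Keep a rolling window of request latencies and flag slow periods."""

    def __init__(self, window: int = 100, p95_threshold_ms: float = 500.0) -> None:
        # A bounded deque discards the oldest sample once the window is full.
        self.samples = deque(maxlen=window)
        self.p95_threshold_ms = p95_threshold_ms  # hypothetical alert threshold

    def record(self, latency_ms: float) -> None:
        self.samples.append(latency_ms)

    def p95(self) -> float:
        """Nearest-rank 95th percentile of the current window."""
        if not self.samples:
            return 0.0
        ordered = sorted(self.samples)
        # Integer form of ceil(0.95 * n), which avoids float rounding.
        rank = (95 * len(ordered) + 99) // 100
        return ordered[rank - 1]

    def is_degraded(self) -> bool:
        """True when the 95th-percentile latency exceeds the threshold."""
        return self.p95() > self.p95_threshold_ms
```

A service would call `record()` after each request and check `is_degraded()` periodically. Watching a high percentile rather than the mean is a common design choice because it surfaces slow outliers that an average would hide.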

Collaboration and Communication Technologies

OpenAI uses collaboration and communication technologies to facilitate teamwork across its global operations. These technologies, including an Active Directory-style directory service, enable communication and resource sharing among team members regardless of their physical location. They are vital for coordinating development efforts and fostering a culture of innovation.

Facilitating Teamwork Across Global Operations

An Active Directory-style directory service streamlines access management and resource allocation, making it easier for teams to collaborate on projects. Centralizing identity and access management ensures that team members have the permissions they need to access tools and data, which improves both security and efficiency.
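The centralized permission model described above can be sketched in a few lines of Python. This is a simplified, hypothetical role-based check rather than how any real directory service works: the role names, permission strings, and the `User`, `permissions_for`, and `can` helpers are all invented for illustration.

```python
from dataclasses import dataclass, field

# Hypothetical role-to-permission mapping; a real directory defines its own.
ROLE_PERMISSIONS = {
    "researcher": {"read:datasets", "run:training"},
    "engineer": {"read:datasets", "deploy:models"},
    "viewer": {"read:dashboards"},
}


@dataclass
class User:
    name: str
    roles: set[str] = field(default_factory=set)


def permissions_for(user: User) -> set[str]:
    """Union of the permissions granted by each of the user's roles."""
    granted: set[str] = set()
    for role in user.roles:
        # Unknown roles grant nothing instead of raising an error.
        granted |= ROLE_PERMISSIONS.get(role, set())
    return granted


def can(user: User, permission: str) -> bool:
    """Check a single permission against the user's combined roles."""
    return permission in permissions_for(user)
```

Because permissions hang off roles instead of individual users, changing what a role may do updates every member of that role at once, which is the main efficiency gain of a centralized approach.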

Additionally, communication technologies such as messaging platforms and video conferencing tools keep teams connected, enabling real-time collaboration and decision-making. This global network of collaboration and communication tools is crucial for OpenAI’s ability to operate cohesively and innovate at scale.

The Impact of OpenAI’s Tech Stack on Generative AI Development

OpenAI’s tech stack has substantially advanced the development of generative AI. This foundation has enabled progress in creating models that can generate text, images, and even code, changing how machines interpret and interact with the world. By combining advanced neural networks, sophisticated AI techniques, and prompt engineering, OpenAI has expanded what AI systems can do and set new standards for the field.

Generative AI Breakthroughs Powered by OpenAI’s Tech Stack

OpenAI’s tech stack has been the backbone of several generative AI milestones. The integration of languages such as C, powerful development tools, and the OpenAI API has facilitated the creation of AI models that can generate human-like text, support data science work, and offer solutions across various sectors. The stack not only supports the development of such models but also helps ensure they can be deployed safely.
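For readers curious what using the OpenAI API looks like from the client side, the following Python sketch builds (but does not send) a request to the Chat Completions endpoint using only the standard library. The helper name `build_chat_request` is an assumption for this example, and the model name and API key are placeholders supplied by the caller.

```python
import json
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(prompt: str, model: str, api_key: str) -> urllib.request.Request:
    """Build a POST request for a single-turn chat completion.

    The request is only constructed here; nothing is sent over the network.
    """
    payload = {
        "model": model,  # placeholder: pass a model name available to your account
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )
```

Passing the returned object to `urllib.request.urlopen` would send it. In practice most applications use the official client library instead, which handles retries and response parsing.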

From ChatGPT to Advanced Modeling Techniques

The introduction of ChatGPT, a model proficient in understanding and generating natural language, marked a significant leap in AI capabilities. This achievement was made possible by leveraging OpenAI’s comprehensive tech stack, which includes cutting-edge neural networks and sophisticated natural language processing techniques. The foundation laid by these technologies has enabled further advancements, pushing forward the development of more complex and versatile AI models.

Following ChatGPT, OpenAI’s tech stack has continued to support the evolution of generative AI, facilitating the exploration of advanced modeling techniques. This includes the development of foundation models that serve as the base for building specialized AI systems. With each breakthrough, OpenAI’s tech stack proves to be a critical asset in expanding the capabilities of AI systems, making it possible to tackle more challenging problems and explore new applications.

The Role of OpenAI’s Infrastructure in Advancing AI Research

The infrastructure maintained by OpenAI has been instrumental in propelling AI research forward. By providing a robust platform that supports a wide range of AI initiatives, OpenAI’s infrastructure allows researchers to experiment, innovate, and push the boundaries of what AI can achieve. This foundation is crucial for the ongoing development and refinement of AI models, ensuring that AI research can continue to advance at a rapid pace.

Supporting a Diverse Range of AI Initiatives and Projects

OpenAI’s infrastructure is designed to support a diverse array of AI initiatives, from foundational AI research to the development of practical applications. This includes providing resources for extensive data analysis, model training, and the deployment of AI technologies. With access to such a comprehensive infrastructure, researchers and developers can explore new AI techniques, refine existing models, and contribute to the broader field of AI research.

Moreover, this supportive environment fosters collaboration among researchers across the globe. By facilitating teamwork and communication, OpenAI’s infrastructure helps to accelerate the pace of innovation in AI. It enables the AI community to share insights, tools, and methodologies, thereby enriching the entire ecosystem of AI research and development. This collaborative approach is essential for tackling complex challenges and achieving significant advancements in AI.

The Future Powered by OpenAI’s Technological Innovations

Looking ahead, the future of AI is set to be shaped by the technological innovations emerging from OpenAI. With a tech stack that continuously evolves to incorporate the latest advances in AI research and technology, OpenAI is positioned to lead the exploration of the next generation of AI applications. From enhancing natural language processing to expanding what AI systems can do, OpenAI’s commitment to innovation promises to unlock new possibilities and deploy them safely.

Embracing the Next Generation of AI with OpenAI’s Tech Stack

The next wave of AI development hinges on the strength and flexibility of OpenAI’s tech stack. As AI technologies advance, OpenAI’s infrastructure offers the tools and resources necessary for researchers and developers to embrace these changes. The continuous improvement of this tech stack ensures that OpenAI will remain at the forefront of AI research, driving the exploration of uncharted territories in AI capabilities and applications.

Anticipating the Evolution of AI Technologies and Their Applications

The progression of AI technologies is accelerating, and OpenAI’s tech stack is uniquely positioned to support this rapid evolution. By fostering a culture of innovation and providing a robust platform for AI research, OpenAI is helping to shape the future of AI applications. From advanced data science techniques to breakthroughs in neural networks, the potential for transformative impacts across industries is immense.

As AI continues to evolve, OpenAI’s tech stack will play a vital role in enabling AI models that can solve complex problems, enhance human capabilities, and create new opportunities for progress. The scale of the changes AI is expected to bring underscores the importance of OpenAI’s contributions to the field. Through its technological innovations, OpenAI is not just advancing the state of AI research but also paving the way for future applications of AI in society.